Around the time of Bangladesh's independence, none other than a student and scholar of history, Arnold Toynbee, who had already written about the growth and decline of 26 civilisations in an epic multi-volume series, could prophetically describe the fate of what we today call the "digital revolution" in the summation of his Study of History. "The encounter between a new culture-element and an old culture-pattern is always governed by the same set of circumstances," he observed of all the technological developments up to that time. Only among wizened professors would "digital revolution" have resonated then, and even so only briefly and marginally. Nonetheless, what Toynbee saw in all of them, that "the introduction of the new elements condemns the old pattern," might have entered and exited many ears without raising the kind of hullabaloo that "digital revolution" does today. He continued to his prophetic conclusion: "the visitant, which in another context is either harmless or even creative, will actually deal deadly destruction." In the Cold War then, or amid the war on terrorism since, no eyebrows would have been raised.

With what is called the "digital revolution" today, we automatically shout "voila!" as Toynbee's completely unrelated prophecy hits our reality squarely on the head. In our haste and wonder, we get off on the wrong footing, draw the wrong conclusions, make the wrong inferences, and find comfort that many, many others in the vast human population have made almost identical interpretations: any day now, we hum to ourselves, our new inventions will descend and catapult us out of our comfort zone into a chaotic "other" world unless we "do something," that "something" almost always being "master the newly introduced technology." So engrossed are we in our own technology that we shudder to hear of "new" ones. Life, fortunately, does not move in the same straight line as our thought processes and deep yearnings do.

If "artificial intelligence" (AI) is the ghost we have coined to haunt us because it is the stuff robots, drones, and other such contraptions can do far better than mortal humans, we fail to acknowledge how the manipulation of numbers, which lies at the heart of all such contraptions, has been going on for almost a century. Historians and scientists disagree on the dates, but they all attribute the advent of the computer to the "Third Industrial Revolution," which a vast majority of them trace back to the 1930s. John von Neumann and Oskar Morgenstern's game theory appeared in fits and starts at that time (as "parlor games" for the former in 1928, then blossoming with the latter into a monumental 1944 magnum opus, Theory of Games and Economic Behavior). Their discovery did not win any Nobel prize (there was no existing category they could have fitted into, with Economics emerging only in 1969; but the Nobel laureate physicist Eugene Wigner described von Neumann as the only one in the supposedly human race who was "fully awake"); nonetheless, game theory influenced much of the air force logistics that helped win World War II.

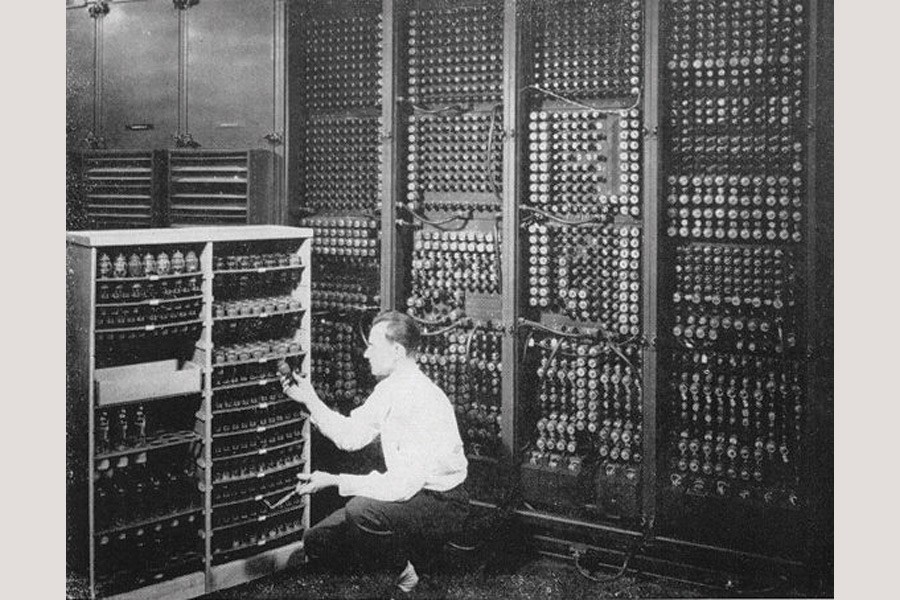

Those same exercises with number manipulation led to the Electronic Numerical Integrator and Computer (ENIAC), widely regarded as the world's first general-purpose electronic computer, unveiled at the University of Pennsylvania's Moore School of Electrical Engineering in February 1946 by Ursinus College Professor John W. Mauchly and Penn graduate J. Presper Eckert, Jr. (in a course, "Engineering, Science, Management War Training," funded by the US government). Von Neumann served as ENIAC consultant from the start. How that large room-sized contraption (20 feet by 30, weighing 30 tons) has been reduced to a hand-held device weighing not even a pound today involved as many number manipulations and reconfigurations as had been going on since 1928, with the robots, drones, and other "Fourth Industrial Revolution" compatriots we have today as offshoots. We did not raise as many eyebrows when we saw these contraptions in comics and movies, but now that they are supposedly "set" to "replace us," we act as if an IFO (identified flying object) invasion is forthcoming.

When the United States once began losing its relative global advantage in producing so many manufactured goods, "Japan bashing" was coined to fire up human adrenalin, a tactic the same country uses against China today, as do many other countries whose competitive advantage is slipping to yet other countries. We may either have run out of countries to thrust our negative emotions against, or those emotions themselves may simply have been exhausted, but the current conversion of new technological contraptions (those robots, drones, and the like) into the "they" of the "us" versus "them" equation would be truly laughable if the man-versus-machine reference point were not causing so much inter-personal damage.

The "digital revolution" has not been a revolution of, by, or with digits, as this series labours to show, but a "revolution" is indeed underway, and it is the one we need to address fast and furiously if we are to prevent the ruin that we have conjured and attributed to the digital revolution. That revolution will be as much social as mental, even as our false hopes dwell upon machines today.

On the social front, many jobs will evaporate, but let us not fill our minds with images of waking up one bright morning, drawing the curtains, and finding no more doctors, engineers, nurses, teachers, and other professionals left: robots have taken over, and are ostensibly awaiting us at the front doorstep to begin instructing us what to prepare for breakfast, how to dress, where to go to train for the new "age," and what job we must now train for. Job losses have been happening routinely and glacially for too long for us to suddenly blame the robot family.

Robots, drones, and the like have to be created by humans, and humans must remain to program them, redirect their capacities, and nurture them as they do their own children. Sure, some of the necessary skills will be intellectually more demanding, but since today's youths, born as they were into the world of computers, the Internet, and social manipulation through Facebook and WhatsApp, have shown greater and savvier skills-learning aptitudes than their counterparts did in earlier classrooms with pen, paper, and blackboard, they already have the skill-substructure commensurate to a robotic future sewn into their "internal system." The same held for their parents and grandparents when they adjusted to the Second Industrial Revolution's assembly-line string of job expectations, and for their forebears when they adjusted to the first mechanical inventions evicting them from farmlands, or to the Spinning Jenny snatching away their spinning jobs 250-odd years ago.

That social revolution will tear families apart, but this process began long ago, when we began abandoning extended families to cultivate nuclear counterparts, and we are now even letting the family construct slowly evaporate into history with the advent of single-parent households. Our mindset alteration will be revolutionary. We have been so accustomed to the "survival of the fittest" phrase applied physically, evident in terms like "machismo" and "great powers," that we forget how today the power of the mind is changing all we see before us, so much so that we had better hurry to create new commensurate terms and add them to our vocabularies and dictionaries.

This is what the "digital revolution" boils down to; and yes, it will have boundaries, though no longer geographical or issue-based ones (like the economics ministry differing from the security branch). Based on skills and training, these new boundaries demand that we displace ethnicity, gender, race, and religion as discriminatory yardsticks, since new, more intellectual and virtual ones, rather than their physical or visible counterparts, will rule the day.

This "digital revolution" series explores the panoply of issues opened up by this overarching introductory piece.

Dr. Imtiaz A. Hussain is Professor & Head of the Department of Global Studies & Governance at Independent University, Bangladesh.